Is White Space Tokenization Enough?

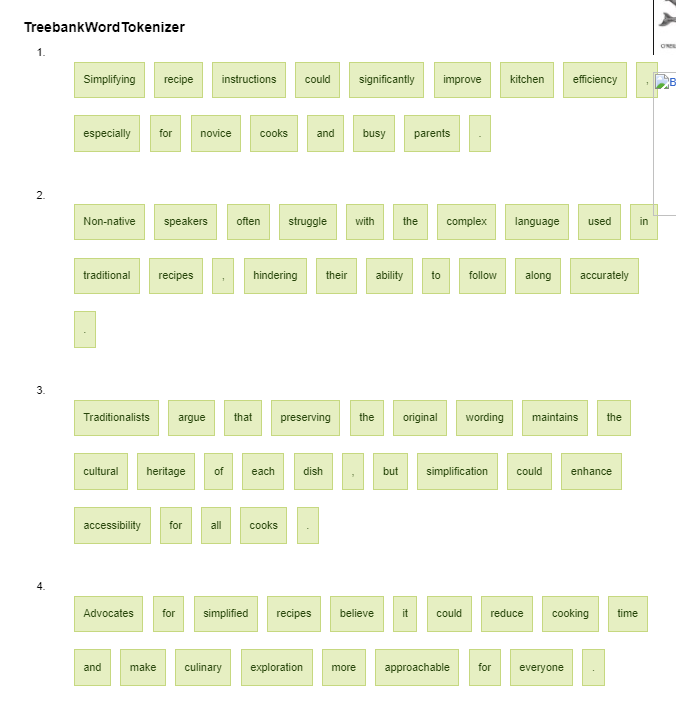

Sample sentences (generated by ChatGPT): Simplifying recipe instructions could significantly improve kitchen efficiency, especially for novice cooks and busy parents. Non-native speakers often struggle with the complex language used in traditional recipes, hindering their ability to follow along accurately. Traditionalists argue that preserving the original wording maintains the cultural heritage of each dish, but simplification could enhance accessibility for all cooks. Advocates for simplified recipes believe it could reduce cooking time and make culinary exploration more approachable for everyone.

The three tokenizers handle the text in much the same way, but they differ in a few places. The Treebank word tokenizer keeps "Non-native" as a single token but splits the comma off "dish,", so "dish" and "," become separate tokens. The WordPunct tokenizer splits both: "Non-native" becomes "Non", "-", "native", and the comma is its own token. The Whitespace tokenizer keeps "Non-native" and "dish," each as a single token, and it leaves every period attached to the last word of its sentence.

In my opinion, spaces are sufficient to tokenize English text. They separate the units that matter in English, and the tokenized examples show that splitting on spaces alone produces sensible word tokens without turning commas and periods into tokens of their own.
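The difference between splitting on spaces and separating punctuation can be reproduced without any library. The sketch below assumes the tokenizers compared above are NLTK's (the text does not name the library). NLTK's WordPunctTokenizer is a regular-expression tokenizer built on the pattern `\w+|[^\w\s]+`, and splitting on runs of whitespace approximates the Whitespace tokenizer. The Treebank tokenizer's rules are more involved and are not reproduced here. The function names `whitespace_tokens` and `wordpunct_tokens` are hypothetical.

```python
import re

# Same pattern NLTK's WordPunctTokenizer uses: a run of word characters,
# or a run of characters that are neither word characters nor whitespace.
WORD_PUNCT = re.compile(r"\w+|[^\w\s]+")


def whitespace_tokens(text: str) -> list[str]:
    """Split on runs of whitespace only; punctuation stays attached to words."""
    return text.split()


def wordpunct_tokens(text: str) -> list[str]:
    """Separate alphanumeric runs from punctuation runs (hyphens, commas, periods)."""
    return WORD_PUNCT.findall(text)


# One of the sample sentences from above.
sentence = ("Non-native speakers often struggle with the complex language used "
            "in traditional recipes, hindering their ability to follow along accurately.")

print(whitespace_tokens(sentence))
print(wordpunct_tokens(sentence))
```

The whitespace version yields "Non-native", "recipes," and "accurately." as tokens. The regex version yields "Non", "-", "native" and separate "," and "." tokens, which matches the differences described above.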

Try out a CALL Tool

The CALL (computer-assisted language learning) tool I chose to try was Duolingo. After spending some time with it, I focused on the kind of feedback it gives. Feedback is immediate after every exercise, and it is specific to that exercise rather than a set of broad tips. In my experience, Duolingo also accepts more than one correct answer for cloze (fill-in-the-blank) questions. The app lets users practice listening, speaking, reading, and writing in the target language. To keep users motivated, it uses game-like elements such as points and streaks, which make practice engaging and enjoyable.